We looked at AMD’s Zen 4 from a software perspective in a few articles. Thanks to Fritzchens Fritz, we have very high resolution die photos of AMD’s Zen 4 and prior Zen generations. That gives us a cool opportunity to look at Zen 4 from a physical perspective.

Zen 4’s CCD

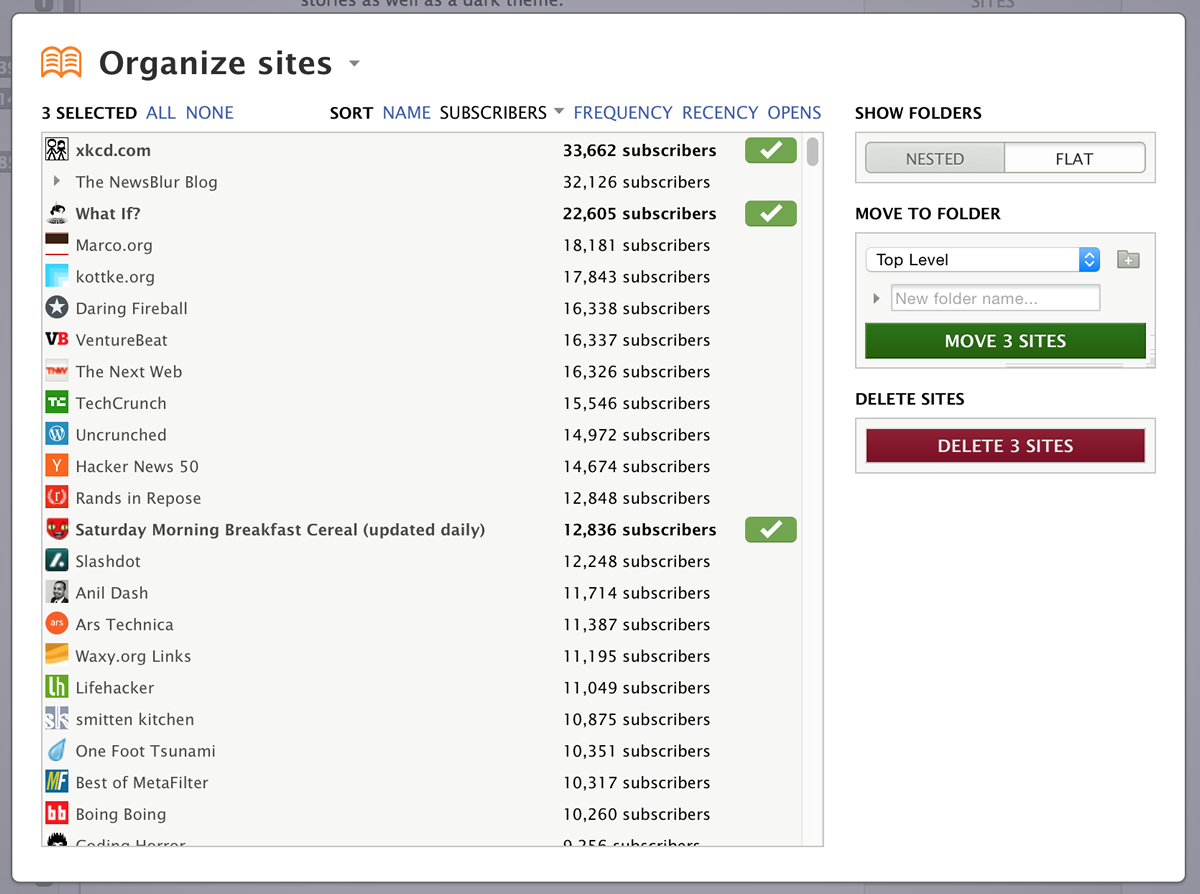

Zen 4 uses a chiplet strategy, carried over from Zen 2 and Zen 3. A Core Complex Die (CCD) contains eight cores and L3 cache. CCDs are connected to an IO die with IFOP (Infinity Fabric On-Package) links. The IO die houses control logic and interfaces for DRAM, PCIe, and other IO. With this strategy, AMD can use the most advanced and expensive process nodes on the CCDs where it can make the most impact, while using a cheaper node for the IO die.

On Zen 4, the CCDs are manufactured with TSMC’s 5 nm process. The IO die uses TSMC’s 6 nm node, which is derived from TSMC’s older 7 nm process. Thanks to the 5 nm node, Zen 4’s CCD is substantially smaller at 67 mm2, compared to Zen 2’s 75.75 mm2 CCD and Zen 3’s 83.736 mm2 CCD. This is quite an impressive reduction in CCD footprint, especially because the Zen 4 cores have doubled L2 capacity and larger core structures.

AMD also changed the CCD layout between generations. Evidently, their engineers have been tweaking their approach as they figure out what works best. Zen 4’s CCD looks very similar to Zen 3’s in most respects, but the IFOP has moved to the right and been split into two links, with the SMU and debug/test logic placed on one side of it.

Some moves are dictated by changing demands. Moving from DDR4 to DDR5 meant more bandwidth between the CCDs and IO die, but IO doesn’t scale well with process node shrinks. Therefore, AMD took the 32 transmit and 40 receive lanes from Zen 2/3’s CCD and split them into two faster links. Each of Zen 4’s IFOP links has 16 transmit and 20 receive lanes, likely with the transfer rate doubled per lane. A CCD with both IFOPs connected can read data from DRAM at 64 bytes per cycle and write 32 bytes per cycle. So far, this capability is only fully utilized on certain Epyc SKUs.
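As a quick sanity check, the per-link lane counts can be added up across both links. This is only a sketch of the arithmetic: the constant names are ours, and the even split of bandwidth between the two links is an assumption rather than something AMD has documented here.

```python
# Per-link lane counts on Zen 4's IFOP, as quoted in the text.
ZEN4_LINKS = 2
TX_LANES_PER_LINK = 16
RX_LANES_PER_LINK = 20

# CCD bandwidth with both IFOPs connected, in bytes per cycle (from the text).
READ_BYTES_BOTH_LINKS = 64
WRITE_BYTES_BOTH_LINKS = 32

total_tx_lanes = ZEN4_LINKS * TX_LANES_PER_LINK
total_rx_lanes = ZEN4_LINKS * RX_LANES_PER_LINK

# Assumption: bandwidth divides evenly between the two links.
read_bytes_per_link = READ_BYTES_BOTH_LINKS // ZEN4_LINKS
write_bytes_per_link = WRITE_BYTES_BOTH_LINKS // ZEN4_LINKS

print(f"lanes across both links: {total_tx_lanes} transmit, {total_rx_lanes} receive")
print(f"per link: {read_bytes_per_link} B/cycle read, {write_bytes_per_link} B/cycle write")
```

Under that even-split assumption, a CCD with only one IFOP connected would get half of the two-link read and write bandwidth.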

To summarize changes across three Zen generations:

| Generation | L3 Cache | IFOP Placement | SMU and Debug/Test |
| --- | --- | --- | --- |
| Zen 4 | Similar to Zen 3 | Moved to the right. Split into two links | Moved to one side of the IFOP |
| Zen 3 | Unified across eight cores | Moved to bottom of CCD to reduce package trace length | Moved to bottom alongside IFOP |
| Zen 2 | Split between two quad core CCX-es | Between CCX-es to reduce average distance from any L3 slice to the IFOP | Placed on both sides of the IFOP |

AMD has heavily emphasized L3 performance and capacity ever since they’ve gone for a chiplet setup with an IO die. Going through the IO die increases DRAM access latency, and better caching offsets that. Zen 2 and Zen 3’s CCDs both spent slightly more area on L3 cache than they did on the cores.

Zen 4 reverses this trend, though the situation is more complex because we’re counting Zen 4’s larger L2 as part of core area. L3 cache capacity remains the same, moving the ratio back in the core direction.

The Zen 4 Core

AMD and Intel’s core sizes follow a zig-zag pattern across generations. Cores get smaller as improved process nodes allow more functionality in the same space. Then they get larger as engineers improve the core on the same node, though in fairness AMD tends to make large architectural changes even when doing a node shrink.

At just 3.77 mm2, Zen 4 is on the small side. It’s a bit larger than Zen 2. But just as with CCD area, Zen 4’s doubled L2 cache capacity makes a big difference. Subtracting L2 area from both Zen 2 and Zen 4 would make Zen 4 slightly smaller at 2.73 mm2 compared to Zen 2’s 2.83 mm2.

L2 Cache SRAM

Let’s take a closer look at the L2 because it’s a significant change over prior Zen generations. AMD has used a 512 KB, 12 cycle L2 with 32 bytes/cycle of bandwidth since Zen 1, with a small exception for first generation Zen 1 desktop parts that had 17 cycle latency. Zen 4 increases L2 capacity to 1 MB, with latency increasing to 14 cycles.

Like most CPU caches, the L2 is built from SRAM. SRAM, or static random access memory, is a fast and low power way to store data. Compared to the DRAM (dynamic random access memory) typically used to provide gigabytes of main memory, SRAM suffers from lower density, so it’s often used to store frequently used data close to the core. SRAM blocks are very regular, often with clear decode sections next to dense arrays of SRAM cells. That makes them easy to identify. Improved process nodes tend to provide better SRAM density, letting CPU designers keep more data close to the core.

High-Speed Computing and Mobile Applications” paper in ISSCC 2018

TSMC’s 5 nm node enables Zen 4’s large L2 with density improvements. Zen 4 implements its 1 MB L2 with 128 SRAM blocks, so each block covers 8 KB of L2 capacity. Earlier Zen generations use 8 KB blocks as well, allowing for direct density comparisons.

Zen 4 uses just 62% of Zen 3’s area to store the same 8 KB of L2 data. The whole L2 complex on Zen 4 takes just 21% more area than on Zen 3, while providing 100% more caching capacity. Compared to Intel’s Raptor Lake, Zen 4’s per-area caching efficiency is nearly identical. Raptor Lake’s L2 cache offers twice as much capacity and bandwidth as Zen 4’s, but with higher 16 cycle latency. Zen 4’s L2 has 14 cycle latency, while earlier Zen generations had 12 cycle L2 latency.
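The density figures above can be tied together with a little arithmetic. The sketch below uses only numbers quoted in the text; the variable names are ours, and it assumes Zen 3's 512 KB L2 is built from the same 8 KB blocks (so half as many of them).

```python
# Zen 4 L2 geometry, as quoted in the text.
L2_BYTES = 1 * 1024 * 1024
DATA_BLOCKS = 128
block_kb = L2_BYTES // DATA_BLOCKS // 1024

# Area ratios relative to Zen 3, as quoted in the text.
BLOCK_AREA_RATIO = 0.62        # area of one 8 KB data block, Zen 4 / Zen 3
L2_COMPLEX_AREA_RATIO = 1.21   # whole L2 complex, Zen 4 / Zen 3
CAPACITY_RATIO = 2.0           # 1 MB vs 512 KB

# Twice as many blocks, each 62% of the old size.
data_area_ratio = BLOCK_AREA_RATIO * CAPACITY_RATIO

# Capacity per unit of L2 complex area, Zen 4 relative to Zen 3.
capacity_per_area_gain = CAPACITY_RATIO / L2_COMPLEX_AREA_RATIO

print(f"each data block holds {block_kb} KB")
print(f"data array area vs Zen 3: {data_area_ratio:.2f}x")
print(f"capacity per L2 area vs Zen 3: {capacity_per_area_gain:.2f}x")
```

The data arrays alone would grow by about 24%, slightly more than the 21% quoted for the whole complex, which suggests the tags and control logic grew by less than the data arrays did.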

L2 area doesn’t just consist of data storage. Caches need tags to track what data they have cached. AMD uses different SRAM types for tag and data SRAMs, because tag storage has to be faster than data storage. Checking for a hit involves comparing tags for eight lines in a set. In contrast, only the matching way (if any) in data storage has to be read out. Each Zen 4 tag SRAM block occupies 69% of the area of a Zen 3 tag block, making for a nice density increase.

Estimating storage density in the L2 tag array is a bit harder, but we can do some napkin math. Zen 4 uses 52-bit physical addresses (PA), but we don’t need to store the whole PA as a tag. Zen 4’s 1 MB L2 cache is 8-way set associative and uses 64B lines, so it holds 16384 lines arranged in 2048 sets. We need 11 bits to select one of those 2048 sets. The low 6 bits don’t matter because caches are addressed at 64 byte cacheline granularity. That leaves us with 35 PA bits required for the tag. Multiplying that by 16384 lines gives us 573440 bits, or 71680 bytes (70 KB), of tags to cover the L2 cache.

Zen 4’s L2 cache has 80 blocks in the tag region. Each block needs 0.875 KB of storage for tags. I’m guessing actual capacity is 1 KB. From the PPR, we know that the L2 tags are ECC protected. Tag SRAMs are also a logical place to track a line’s state, like whether it’s modified or shared. ECC protection and state storage would both require a few extra bits.
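For anyone who wants to redo the napkin math, here is a minimal sketch that derives the tag width directly from the cache geometry (1 MB, 8-way, 64 B lines, 52-bit PA). The function name and return layout are ours, and the estimate deliberately ignores ECC and line state bits.

```python
def cache_tag_storage(capacity_bytes: int, ways: int, line_bytes: int, pa_bits: int) -> dict:
    """Estimate raw tag storage for a physically tagged set-associative cache.

    Assumes power-of-two set counts and line sizes, and counts address tag
    bits only (no ECC, valid, or coherence state bits).
    """
    lines = capacity_bytes // line_bytes
    sets = lines // ways
    index_bits = sets.bit_length() - 1         # log2(sets)
    offset_bits = line_bytes.bit_length() - 1  # log2(line size)
    tag_bits = pa_bits - index_bits - offset_bits
    return {
        "lines": lines,
        "sets": sets,
        "index_bits": index_bits,
        "tag_bits": tag_bits,
        "tag_bytes": lines * tag_bits // 8,
    }

l2 = cache_tag_storage(capacity_bytes=1024 * 1024, ways=8, line_bytes=64, pa_bits=52)
TAG_BLOCKS = 80  # tag SRAM blocks counted on the die photo
bytes_per_tag_block = l2["tag_bytes"] / TAG_BLOCKS

print(l2)
print(f"{l2['tag_bytes'] / 1024:.1f} KB of tags, {bytes_per_tag_block:.0f} bytes per tag block")
```

If each tag block really holds 1 KB, whatever is left over after the address tags would be available for ECC and state bits.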

AMD also stores additional metadata to let the cache kick out the least recently used data when bringing new data into the cache. These LRU bits received a layout change compared to prior Zen generations:

Because the Zen 4 core is shorter, the LRU bits got moved to spaces between the tag SRAMs.
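To make the roles of tags and LRU metadata more concrete, here is a toy model of a set-associative cache. It is purely illustrative: the class name is ours, it tracks true LRU order with an `OrderedDict`, and real hardware (Zen 4 included) may well use a cheaper approximation that AMD hasn't detailed.

```python
from collections import OrderedDict

class SetAssociativeCache:
    """Toy cache model: tracks tags only, with true LRU replacement."""

    def __init__(self, num_sets: int, ways: int, line_bytes: int = 64):
        self.num_sets = num_sets
        self.ways = ways
        self.offset_bits = line_bytes.bit_length() - 1
        # One ordered map per set. Keys are tags, ordered from LRU to MRU.
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, addr: int) -> bool:
        """Return True on a hit. On a miss, fill the line and evict the LRU way if full."""
        line = addr >> self.offset_bits
        index = line % self.num_sets   # selects the set
        tag = line // self.num_sets    # compared against every way in the set
        ways = self.sets[index]
        if tag in ways:
            ways.move_to_end(tag)      # mark as most recently used
            return True
        if len(ways) >= self.ways:
            ways.popitem(last=False)   # kick out the least recently used line
        ways[tag] = None
        return False

# L2-like geometry: 1 MB, 8-way, 64 B lines.
L2_SETS = (1024 * 1024) // 64 // 8
l2_model = SetAssociativeCache(L2_SETS, ways=8)
stride = L2_SETS * 64                  # addresses this far apart share a set

first_pass = [l2_model.access(i * stride) for i in range(8)]  # fills all 8 ways
rehit = l2_model.access(0)             # line 0 becomes most recently used
l2_model.access(8 * stride)            # ninth line evicts the LRU line (line 1)
line1_hit = l2_model.access(1 * stride)

print(first_pass, rehit, line1_hit)
```

The lookup compares the incoming tag against every way in one set, which is why tag storage has to be fast, while only a single way of data needs to be read out on a hit.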

After data is retrieved from L2 or beyond, it’s filled into the core’s L1 data or instruction caches. Like Zen 2 and Zen 3, Zen 4 has rather long datapaths from L2 to its L1 caches.

Zen 4 Core, Main Portion

Core labeling can involve a lot of guesswork, especially when there’s a lot of synthesized logic that just looks like a blob of mysterious goo. Thankfully, AMD gave a high level overview of core components in ISSCC:

We can do a quick labeling of Fritzchens Fritz’s Zen 4 die photo from that slide, as well as the L2 slide above. The L2 cache and floating point unit are the largest parts of the core, and consume about 20.28% and 17.67% of core area respectively. They’re placed on the left and right sides of the core, flanking the main part of the core.

Within the center part of the core, Zen 4 has a large branch predictor that occupies about 10.6% of core area. Next up is the load/store unit, which comes in at 10.9% of core area. That figure includes the 32 KB L1 data cache and L2 TLB. After that, Zen 4’s frontend consumes 9.77% of core area, including the instruction cache and micro-op cache. Zen 4’s integer execution engine is last in line. The area AMD labeled as “Int ALU” only accounts for 3.05% of core area, while the scheduler takes up 2.53%. Therefore, the whole integer execution cluster takes up a mere 5.58% of the core.
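Adding up the percentages quoted so far gives a feel for how much of the core they cover. The sketch below only sums the figures from the text; the dictionary keys are our own labels.

```python
# Approximate share of Zen 4 core area per block, in percent (from the text).
core_area_pct = {
    "L2 cache": 20.28,
    "Floating point unit": 17.67,
    "Load/store unit": 10.9,
    "Branch predictor": 10.6,
    "Frontend": 9.77,
    "Int ALU": 3.05,
    "Integer scheduler": 2.53,
}

integer_cluster_pct = round(
    core_area_pct["Int ALU"] + core_area_pct["Integer scheduler"], 2
)
accounted_pct = round(sum(core_area_pct.values()), 2)
remaining_pct = round(100 - accounted_pct, 2)

print(f"integer cluster: {integer_cluster_pct}%")
print(f"accounted for: {accounted_pct}%, not broken out above: {remaining_pct}%")
```

Roughly a quarter of the core is not covered by these figures, which would include blocks like the renamer and other logic that is harder to attribute.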

Identifying Core SRAM Blocks

We can try to label the core with better granularity. The large blocks of SRAM within the decode and instruction cache portions are almost certainly data storage for the micro-op cache and instruction cache. Within the DCache and Load/Store section, the block of SRAM closest to the floating point unit is the L1 data cache.

Other SRAM blocks are more difficult to label. However, many of them use the same type of SRAM as the L1 instruction cache, which we know has 32 KB of capacity. With that knowledge, we can estimate the capacity of other SRAM blocks. Specifically, we’re looking for:

| Structure | Expected Size | Napkin Math | Comments |
| --- | --- | --- | --- |
| L1 tags | 2560 bytes (2.5 KB) | 512 lines, 8-way = 64 sets. A 52-bit PA is split into 6 index, 6 offset, and 40 tag bits. 40 bits * 512 lines = 20480 bits, or 2560 tag bytes | One calculation works for both the L1 data and instruction caches, because they have the same geometry (32 KB 8-way) |
| L1 DTLB: 72 entry fully associative | 765 bytes | 45-bit VA + 40-bit PA per entry. (45+40)*72 = 6120 bits, or 765 bytes | 12 bits used for offset into page. A fully associative cache won’t use any address bits to index into it, because there’s basically one set |
| L2 DTLB: 3072 entry 24-way | 30 KB | (38-bit VA tag + 40-bit PA) * 3072 / 8 bits per byte = 29952 bytes | 128 sets, so 7 index bits. 12 bits used for offset into page. That leaves 57-7-12 = 38 tag bits |
| L1 iTLB: 64 entry fully associative | 680 bytes | (45+40)*64 = 5440 bits / 8 bits per byte = 680 bytes | Similar to L1 DTLB calculation but with fewer entries |
| L2 iTLB: 512 entry 8-way | 5 KB | (39+40)*512/8 = 5056 bytes | 64 sets need 6 index bits, so the VA tag needs 39 bits. Another 12 bits don’t need to be tracked because they’re the offset into the page |
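The TLB rows in the table all follow the same recipe, so they can be reproduced with one small helper. This is a sketch under the table's own assumptions (57-bit virtual addresses, 52-bit physical addresses, 4 KB pages, no extra permission or state bits); the function name is ours.

```python
def tlb_storage_bytes(entries: int, ways: int, va_bits: int = 57,
                      pa_bits: int = 52, page_offset_bits: int = 12) -> float:
    """Estimate TLB storage: each entry holds a VA tag plus a physical page number.

    A fully associative TLB is modeled as ways == entries, which gives one set
    and therefore zero index bits.
    """
    sets = entries // ways
    index_bits = sets.bit_length() - 1               # log2(sets)
    va_tag_bits = va_bits - page_offset_bits - index_bits
    pa_page_bits = pa_bits - page_offset_bits
    return entries * (va_tag_bits + pa_page_bits) / 8

tlbs = {
    "L1 DTLB": tlb_storage_bytes(entries=72, ways=72),
    "L2 DTLB": tlb_storage_bytes(entries=3072, ways=24),
    "L1 iTLB": tlb_storage_bytes(entries=64, ways=64),
    "L2 iTLB": tlb_storage_bytes(entries=512, ways=8),
}

for name, size in tlbs.items():
    print(f"{name}: {size:.0f} bytes ({size / 1024:.2f} KB)")
```

Real TLB entries also carry permission bits, page size information, and so on, so these are lower bounds that are mainly useful for matching SRAM blocks by rough size.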

Zen 4’s L1 instruction and data TLBs are probably too small to see. They don’t have a lot of entries and occupy less than 1 KB of storage. For comparison, Zen 4’s load and store queues have more entries, and the store queue would have larger entry sizes from having to hold pending store data.

Zen 4’s branch predictor has a staggering 152 KB of SRAM capacity. Alone, it accounts for nearly half of core SRAM usage, and can store more bits than the L1 data cache, L1 instruction cache, and micro-op cache combined. It includes history storage that tracks how branch behavior correlates with past branches, and branch target buffers that remember where branches went. Trying to calculate storage required for these structures is an exercise in futility, but we can approximate indirect target array size thanks to AMD’s documentation.

Only a limited number of indirect targets that cross a 64MB aligned boundary relative to the branch address can be tracked in the indirect target predictor.

Software Optimization Guide for the AMD Zen 4 Microarchitecture

Loosely translated, most of the indirect target array’s 3072 entries only store the low 26 bits of the branch target. The indirect target array would use just under 10 KB of storage.
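The estimate is simple enough to write out. The sketch below assumes every entry stores only the low target bits implied by the 64 MB boundary, with no tags or other metadata, so treat it as a floor rather than a measured size.

```python
ENTRIES = 3072                       # indirect target array entries
BOUNDARY_BYTES = 64 * 1024 * 1024    # 64 MB aligned boundary from AMD's guide

# A target within the same 64 MB aligned region differs only in its low bits.
target_bits = BOUNDARY_BYTES.bit_length() - 1

# Assumption: no tag, valid, or other metadata bits per entry.
total_bytes = ENTRIES * target_bits // 8
total_kb = total_bytes / 1024

print(f"{target_bits} bits per entry, {total_bytes} bytes ({total_kb:.2f} KB)")
```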

Process node improvements helped to enable Zen 4’s larger SRAM-based structures. Compared to Zen 2 and Zen 3, Zen 4’s L1i SRAM blocks only take 68.8% as much area.

Dataflow-Based Guessing

Past the SRAM blocks, other core components are difficult to label. But we can make educated guesses because blocks that frequently interact with each other are likely to be adjacent. Let’s start with the frontend.

The frontend’s branch predictor is effectively the first stage in the CPU’s pipeline, and feeds fetch addresses to the frontend. Fetch addresses are then looked up in either the micro-op cache or instruction cache. Taken branches’ target addresses can get looked up in both, and can switch the frontend to taking operations from the micro-op cache.

In fetch/decode mode, instruction bytes are brought from the L1 instruction cache and translated into micro-ops. A queue of fetched instruction bytes likely sits between the micro-op cache and instruction cache, and helps to smooth out spikes in instruction bandwidth demand. Zen 4’s optimization guide states that the queue has 24 entries, each of which holds 16 bytes for 384 bytes of queue capacity.

Zen 4’s four-wide decoder probably sits in the corner of the central core area. There, it would have quick access to the instruction byte queue and microcode ROM. Microcode is used for more complex x86 instructions, like REP-prefixed fast string copies. The microcode sequencer also has a few blocks of SRAM. That’s likely patch RAM, used to fix bugs or vulnerabilities discovered after the core has been released.

Micro-ops from the decoders are then filled into the micro-op cache, and sent to a micro-op queue in front of the renamer. Unlike Intel, AMD will cache microcode ops in the op cache. But like Intel, AMD likely has this micro-op queue double as a loop buffer. From microbenchmarking and poking performance counters, the queue seems to have 144 entries. This queue is probably just above the decoders, where it can be easily fed by either the micro-op cache or decoders. The renamer likely sits just above that, where it’s adjacent to both the FPU and integer scheduler. That lets it allocate resources and send micro-ops with renamed registers to either side.

Scheduling and Execution

Micro-ops sent to the integer side end up in the scheduler. The scheduler is adjacent to both the integer ALUs and the load/store unit, minimizing distances for the critical schedule-execute loop. The load/store unit’s proximity to the scheduler suggests AMD could let it directly wake up micro-ops in the scheduler, cutting down load-to-use latency. The integer ALU section almost certainly includes the integer register file. There are two regular looking areas that could be the register file, but it’s hard to tell for certain.

On the floating point and vector side, the two large regular areas are the register files. Operands will have to move from right to left to the execution units. Prior Zen generations reduced register file to execution unit distances by placing the execution units on both sides of the register file. Zen 4’s new arrangement increases distance between the register files and some execution units, but places the register files a bit closer to the integer side and data cache. That could reduce power consumption and allow for higher clocks when using vector operations to move memory around. It could also help with data movement between the FP and integer side.

With that, here’s a labeled photo of the Zen 4 die, including the datapath-based guessing from above:

L3 Cache

Zen 4’s L3 cache consumes considerable area and plays a major role in the design. It insulates the cores from the relatively slow cross-chiplet interconnect and features more per-core cache capacity than Intel’s designs. AMD has improved L3 caching density with each Zen generation. Zen 2 and Zen 3 used the same process, but a switch to high density SRAM reduced area usage.

Zen 4 takes a process node shrink and continues the trend. A 16 KB block of L3 SRAM only takes 82.5% of the area it did on Zen 3, or 68% compared to Zen 2. Looking at an entire L3 slice shows a much larger area decrease, thanks to incredible tag density and a much denser TSV implementation.

AMD achieved this by using high density SRAM bitcells for L3 tags. Tags need high performance storage because every L3 access will check a set of 16 tags (the L3 is 16-way set associative) to determine if there’s a hit. Furthermore, L3 tag comparison latency affects both L3 hit and miss latency. Trading area for better tag performance and power makes sense, and AMD did so on Zen 2 and Zen 3. However, TSMC’s 5 nm process was able to provide enough performance and power efficiency with high density SRAM, even when used as tags.

SRAM density improvements have slowed down, but cache area efficiency continues to improve as higher density SRAM is able to take on more demanding roles like tag storage. Caches aren’t entirely SRAM either. Logic density improvements help make the cache controller smaller. In the end, Zen 4’s 4 MB L3 slice takes just 66.4% of Zen 3’s area while retaining VCache compatibility.

VCache Implementation

Like Zen 3, Zen 4’s CCD supports a stacked cache die that can increase L3 capacity to 96 MB. Zen 3 placed the cache die over L3 area, but Zen 4’s smaller CCD meant the VCache die now covers all of the L2 cache and a small part of the core. Zen 4 still avoids placing cache over hotter parts of the core like the schedulers and execution units.

Although the VCache die packs twice as much data caching capacity, SRAM density is similar. Instead, the VCache die achieves its high caching density by dedicating more area to SRAM. Besides overlapping the L2 cache and part of the core, each 8 MB VCache slice also covers control logic in the center of the die.
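The capacity figures line up if we assume one L3 slice and one VCache slice per core, which is our reading of the die photo rather than something stated outright. A short check, with names of our choosing:

```python
CORES_PER_CCD = 8
BASE_L3_SLICE_MB = 4    # on-die L3 slice size, from the text
VCACHE_SLICE_MB = 8     # stacked cache slice size, from the text

# Assumption: one base slice and one VCache slice per core.
base_l3_mb = CORES_PER_CCD * BASE_L3_SLICE_MB
vcache_mb = CORES_PER_CCD * VCACHE_SLICE_MB
total_l3_mb = base_l3_mb + vcache_mb

print(f"base L3: {base_l3_mb} MB, VCache: {vcache_mb} MB, total: {total_l3_mb} MB")
```

That reproduces the 96 MB total, with the stacked die contributing twice the capacity of the base die's L3.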

Finally, the cache die only has data and tag SRAM. The base die has to make room for L3 cache controllers and L2 shadow tags.

Final Words

Higher density from TSMC’s 5 nm process contributes to Zen 4’s improvements over Zen 3. Denser L1i SRAM macros were used to give Zen 4 a larger L2 TLB, micro-op cache, and branch predictor structures. Beyond SRAM density, high density SRAM has become more capable. Performance critical applications like cache tags can use high density SRAM, improving area efficiency beyond what plain SRAM scaling would provide.

However, TSMC’s high density SRAM still has worse density than Intel’s. Raptor Lake uses the same SRAM type for L2 and L3 cache, and that provides just over 3 KB of storage per μm2. AMD’s area usage ends up being the opposite of Intel’s even though both manufacturers have 5 MB of L2 + L3 cache per core. While AMD uses a lot of area to implement cache, Intel puts more area into the core. The result is a complex picture that can’t be captured just by comparing core area.

Zen 5 is rumored to use TSMC’s newer 4 nm process. Improved density should enable all kinds of advancements as larger transistor budgets become feasible. I look forward to seeing what that brings.
